Configure Ingestion

Data pipeline setup in DataStori is a three-step process:

- Configure Ingestion

- Scheduling and Data Load

- Destination Setup

This article covers the first step of configuring data ingestion.

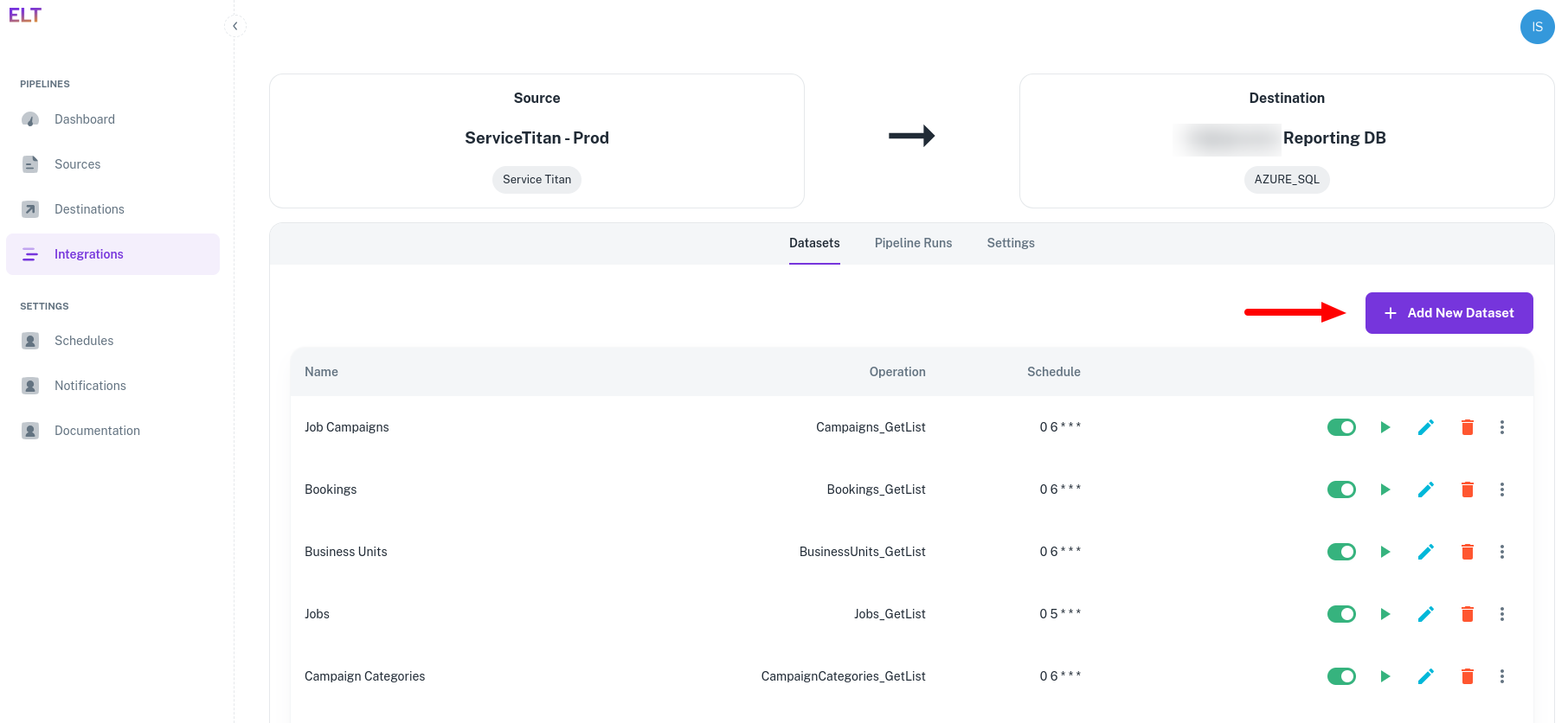

In the Integrations tab, select 'Add New Dataset'.

Based on the ingestion source type - API, email, SFTP folder, or database connection - DataStori has different configuration options.

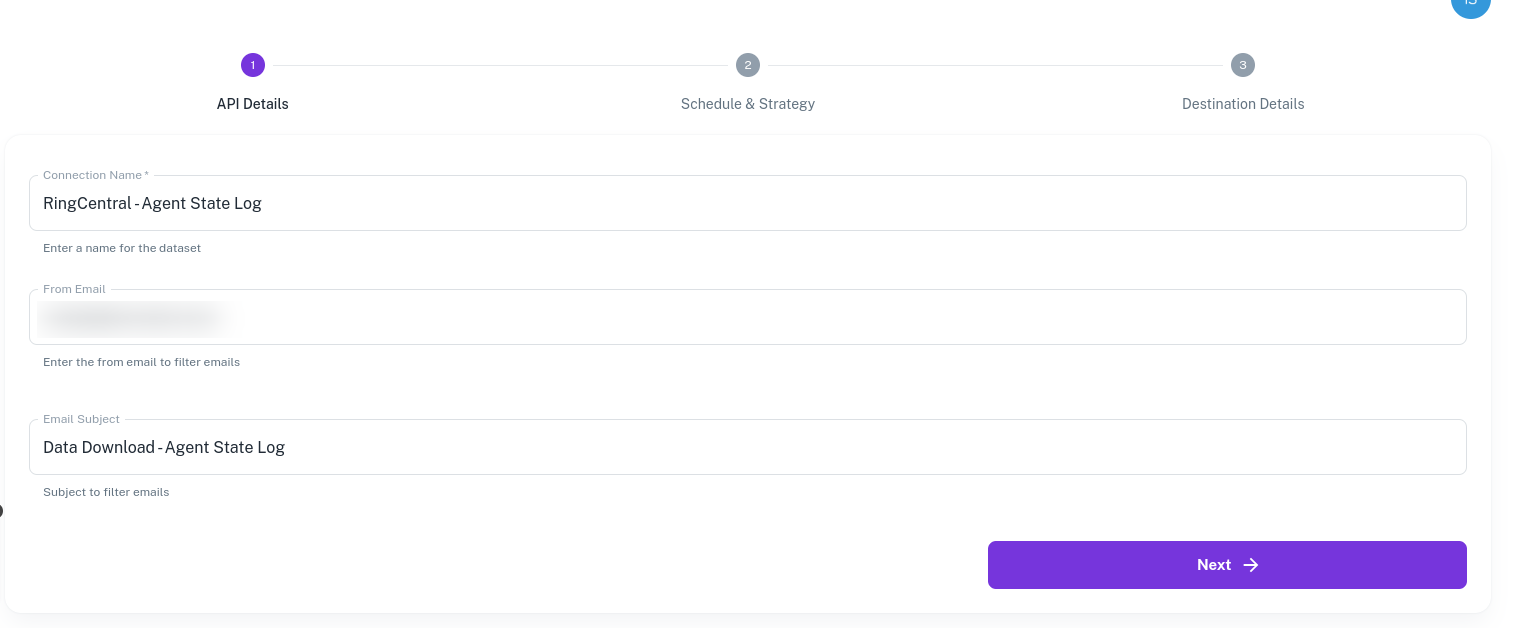

Email

In the designated inbox, DataStori filters in the emails with the defined 'From Email' ID and the subject containing the string provided in 'Email Subject'. It then downloads the CSV or Excel files attached to these filtered emails.

Only CSV and Excel (.xlsx) attachments are supported by DataStori. Excel files with formatting or macros are not supported.

API

The API Details tab has the following components:

Operation ID

Each API operation is defined using a unique id. Depending upon the dataset, select the correct operation ID. The request and response of the operation can be checked in the right half of the screen.

Pagination

If the pipeline supports pagination, select Yes and DataStori automatically loops through the pages in the application. Pagination support for the selected operation can be checked in the API Documentation listed in the right half of the screen.

Pipeline Variables

Pipeline variables are simple key-value pairs. The values can be dynamic functions that are resolved at run-time. Pipeline variables are used for:

- Defining the dynamic inputs to be passed to the pipelines. For example, to run a pipeline with yesterday's date as an input, the user can define a pipeline variable having the following value:

(datetime.date.today() - datetime.timedelta(days=1)).strftime('%m/%d/%Y')

- Defining the dynamic folder name pattern in the destination as per the user's naming preferences.

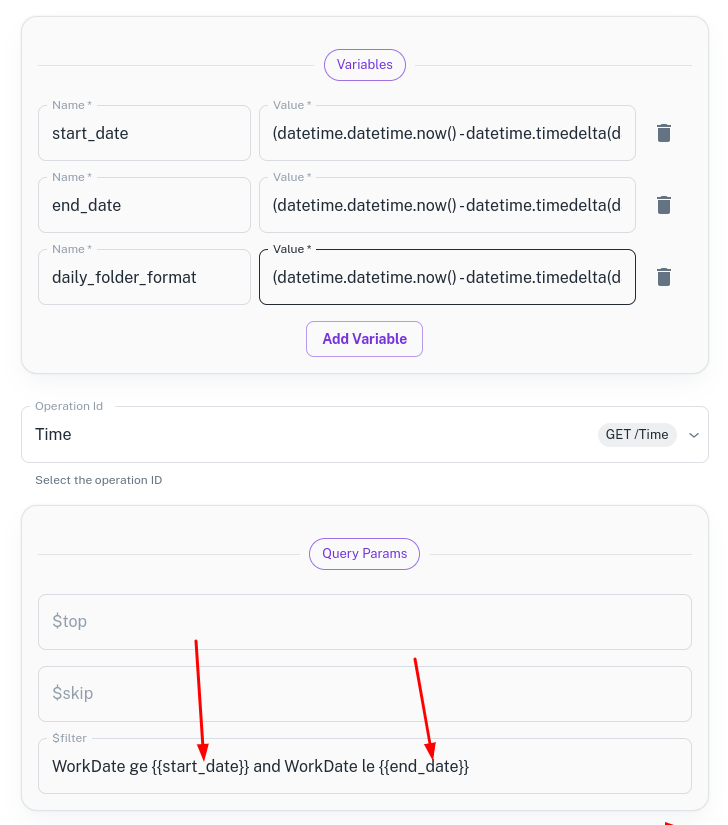

In the following example, three pipeline variables are defined for the API: dynamic start_date, dynamic end_date and daily_folder_format

Supported Functions in Variables

Pipeline variables support only datetime functions (Datetime Library documentation). Any other function resolves to an exception.

The return value of a datetime function needs be a string datatype.

Using Variables

Pipeline variables need to be enclosed in {{ }} brackets. These values are substituted and resolved when the API runs. DataStori offers users a lot of flexibility when defining datetime functions.

When using variable functions, users can append two or more variables. For example,

- {{startdate}}{{end_date}}

- daily_{{start_date}}

- employees{{start_date}}{{end_date}}_production

Examples of Datetime Functions

- Yesterday in mm/dd/yyyy format

Function: (datetime.date.today() - datetime.timedelta(days=1)).strftime('%m/%d/%Y')

Output format: 02/15/2025

- Start of last month in dd-mm-yyyy format

Function: (datetime.date.today().replace(day=1) - datetime.timedelta(days=1)).replace(day=1).strftime('%d-%m-%Y')

Output format: 01-01-2025

- End of last month in dd-mm-yyyy format

Function: (datetime.date.today().replace(day=1) - datetime.timedelta(days=1)).strftime('%d-%m-%Y')

Output format: 31-01-2025

- Start of last week in dd-mm-yyyy HH:mm:ss format, where the week starts on Monday and ends on Sunday.

Function: (datetime.datetime.now() - datetime.timedelta(days=datetime.datetime.now().weekday() + 7)).replace(hour=0, minute=0, second=0, microsecond=0).strftime('%d-%m-%Y %H:%M:%S')

Output format: '03-02-2025 00:00:00'

- End of last week in yyyy-mm-dd format

Function: (datetime.date.today() - datetime.timedelta(days=datetime.date.today().weekday() + 1)).strftime('%Y-%m-%d')

Output format: '2025-02-09'

API Parameters

On selecting the Operation ID, the available API parameters become visible in the UI.

- Pagination parameters can be skipped unless the user wants to override them with a default value.

- Any other parameter that is necessary can be provided.

- API parameters can be composed of Pipeline Variables, and the values can be dynamic in nature.

- Mandatory parameters are marked with * and their values need to be entered.

- Data fetch logic can be customized using API parameters. For example, fetch data only for active=true employees or fetch data only for employees belonging to department id = 61.

- API parameters are read from the source application's API documentation.